You may often hear developers say “But, it works on my machine!” It’s so common that it’s a meme in the dev world.

This happens because as code becomes more complex, local developer environments drift further away from the server environment setup. Local machines end up with libraries and programs that may not be available on the servers, leaving the code with unfulfilled dependencies once it is deployed.

A containerization solution was needed to standardize environments across devices, and voilà, Docker was born.

Docker changed the way applications are built, shipped, and run. The word “Docker” has become synonymous with efficiency, portability, and scalability.

In this guide, we’ll better understand what Docker is, how it works, and how it could benefit you in your day-to-day development workflows.

Let’s get started, shall we?

What Is Docker?

At its core, Docker is an open-source platform that enables developers to automate the deployment, scaling, and management of applications using containerization technology. It provides a standardized way to package software along with its dependencies into a single unit called a container.

Containers are lightweight, self-contained environments that hold everything an application needs to run, including the code, runtime, system tools, libraries, and settings. They bundle the operating system’s user-space files but share the host’s kernel rather than shipping their own. They provide a consistent and reproducible way to deploy applications across different environments, from development to testing to production.

Containerization

Containerization is a technique that allows applications to be packaged and run in isolated containers. It offers several advantages over traditional deployment methods:

- Consistency: With containers, your applications run consistently across different environments, eliminating compatibility issues and reducing the risk of runtime errors.

- Efficiency: They’re resource-efficient compared to virtual machines because they share the host system’s kernel and resources, resulting in faster startup times and lower overhead.

- Scalability: You can easily replicate and scale containers horizontally, allowing applications to handle increased workloads by distributing them across multiple containers.

- Portability: The application can be moved easily between development, testing, and production environments without requiring modifications.

Docker’s Role In Containerization

Before Docker came into the picture, containerization was complex and required deep technical expertise to implement effectively. Docker introduced a standardized format for packaging applications and their dependencies into portable container images.

Developers can define the application’s runtime environment, including the base operating system image, libraries, and configuration files, in a plain text file of build instructions called a Dockerfile. This Dockerfile is a blueprint for creating Docker images, which are immutable snapshots of the application and its dependencies.

Once a Docker image is created, it can be easily shared and deployed across different environments. Docker provides a centralized online repository called Docker Hub, where developers can store and distribute their container images, fostering collaboration and reusability.

Docker also introduced a command-line interface (CLI) and a set of APIs that simplify the process of building, running, and managing containers. Developers can use simple commands to create containers from images, start and stop containers, and interact with containerized applications.

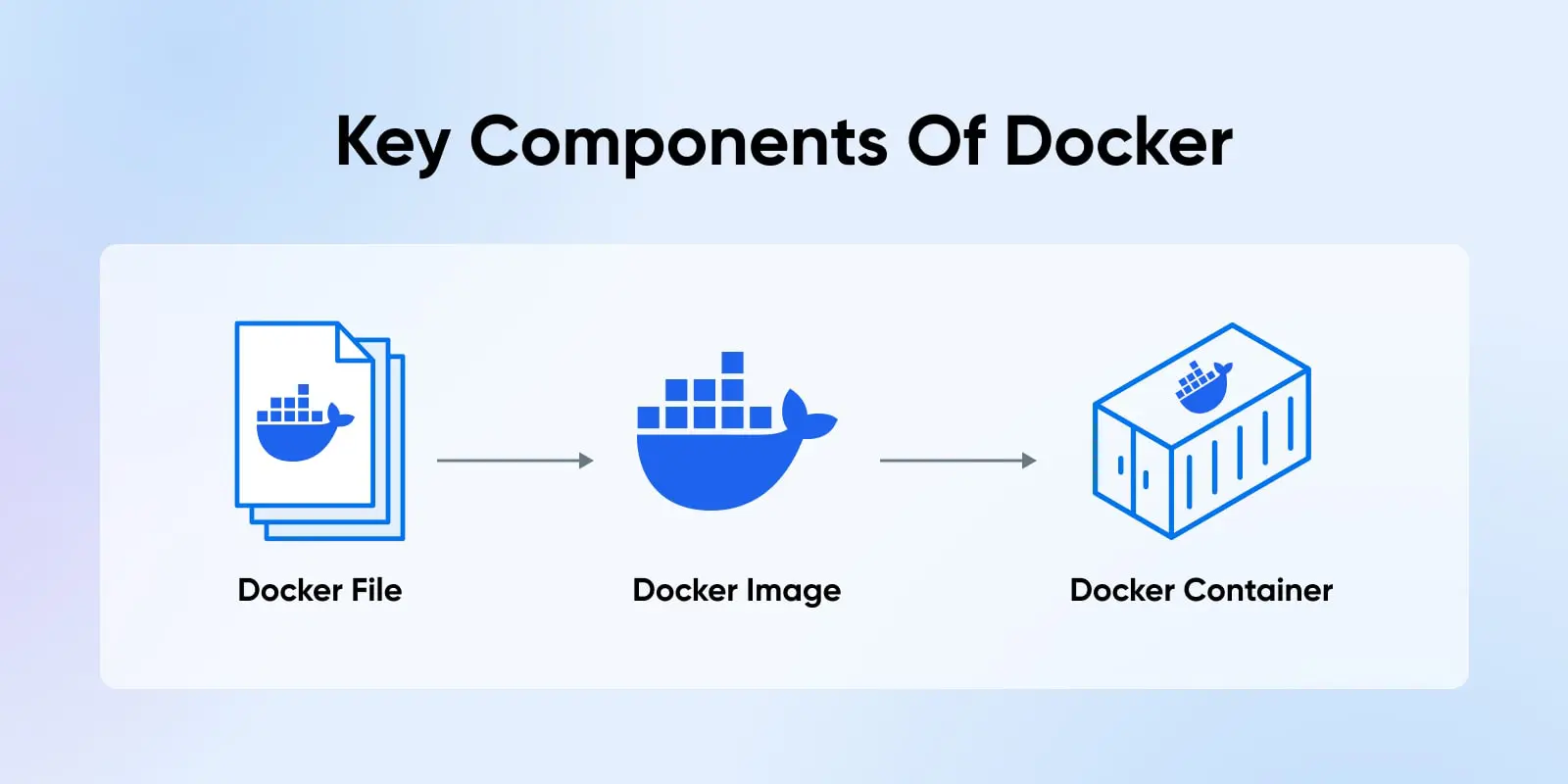

Key Components Of Docker

Now, let’s examine the key components of Docker to better understand the underlying architecture of this containerization technology.

1. Docker Containers

As you’ve probably guessed, containers are at the heart of Docker. Containers created with Docker are lightweight, standalone, and executable packages that include everything needed to run a piece of software. Containers are isolated from each other and the host system, ensuring they don’t interfere with each other’s operations.

Think of containers as individual apartments in a high-rise building. Each apartment has its own space, utilities, and resources, but they all share the same building infrastructure.

2. Docker Images

If containers are apartments, then Docker images are the blueprints. An image is a read-only template that contains a set of instructions for creating a container. It includes the application code, runtime, libraries, environment variables, and configuration files. You can find a lot of pre-built Docker images on the Docker Hub that we previously discussed.

Images are built using a series of layers. Each layer represents a change to the image, such as adding a file or installing a package. When you rebuild an image, Docker reuses cached layers up to the first instruction whose inputs changed and rebuilds only that layer and the ones after it, making the process efficient and fast.

3. Dockerfiles

A Dockerfile is a plain text file that contains a series of instructions on how to build a Docker image. It specifies the base image to start with, the commands to run, the files to copy, and the environment variables to set.

Here’s a simple Dockerfile example:

FROM ubuntu:latest

RUN apt-get update && apt-get install -y python3

COPY app.py /app/

WORKDIR /app

CMD ["python3", "app.py"]

In this example, we start with the latest Ubuntu image, install Python 3 (current Ubuntu releases ship it as the python3 package), copy the app.py file into the /app directory, set the working directory to /app, and specify the command to run when the container starts.
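Because layers are cached in order, the order of instructions in a Dockerfile affects how much work a rebuild has to do. As a minimal sketch, the script below writes a hypothetical Dockerfile.example that copies a dependency list before the application code. The requirements.txt file and the python:3.12-slim base image are assumptions made for illustration and are not part of the example above.

```shell
# Write an example Dockerfile ordered so that rarely changing steps come first.
# requirements.txt and app.py are hypothetical project files.
cat > Dockerfile.example <<'EOF'
FROM python:3.12-slim
WORKDIR /app

# The dependency list changes rarely, so this layer and the install
# layer below it can usually be reused from the build cache.
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt

# Application code changes often; a rebuild typically starts here.
COPY app.py .

CMD ["python", "app.py"]
EOF
```

With this ordering, editing app.py should leave the dependency layers cached. You could build it with docker build -f Dockerfile.example -t my-app . from a directory containing both files.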

What Are The Benefits Of Using Docker?

Docker offers numerous benefits that make it a popular choice among developers and organizations:

Simplified Application Development

Docker simplifies development. Because each application is packaged with its dependencies in a container, developers can work on different parts of an application independently while still running them against the same environment. Testing is also simplified, and issues can be caught earlier in the development cycle.

Enhanced Portability

Applications become more portable. Containers can run consistently on different environments, whether on a developer’s local machine, a testing environment, or a production server. This eliminates compatibility issues and makes it easier to deploy applications to different platforms.

Improved Efficiency

Docker improves efficiency. Containers are lightweight and start up quickly, making them more efficient than traditional virtual machines. This means you can get more out of your resources and deploy applications faster.

Better Scalability

Scaling applications is easier with Docker. You can run multiple containers across different hosts to handle increased traffic or workload.

Streamlined Testing And Deployment

Docker streamlines testing and deployment. Docker images can be easily versioned and tracked, making it easier to manage changes and roll back if needed. Docker also works well with continuous integration and delivery (CI/CD) pipelines, which automate the build and deployment process.

What Are Some Use Cases For Docker?

Docker is widely adopted across various industries and use cases. Let’s explore some common scenarios where Docker shines.

Microservices Architecture

Docker is an excellent fit for building and deploying microservices-based applications. Microservices are small, independently deployable services that work together to form a larger application. Each microservice can be packaged into a separate Docker container, empowering independent development, deployment, and scaling.

For example, an e-commerce application can be broken down into microservices such as a product catalog service, a shopping cart service, an order processing service, and a payment service. Each of these services can be developed and deployed independently using Docker containers, making the overall application much more modular and maintainable.

Continuous Integration And Delivery (CI/CD)

Docker plays an important role in enabling continuous integration and delivery (CI/CD) practices. CI/CD is a software development approach that emphasizes frequent integration, automated testing, and continuous deployment of code changes.

With Docker, you can create a consistent and reproducible environment for building, testing, and deploying applications. You can define the entire application stack, including dependencies and configurations, in a Dockerfile. This Dockerfile can then be version-controlled and used as part of your CI/CD pipeline.

For example, you can set up a Jenkins pipeline that automatically builds a Docker image whenever code changes are pushed to a Git repository. The pipeline can then run automated tests against the Docker container and, if the tests pass, deploy the container to a production environment.
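The steps such a pipeline runs can be sketched as a small shell function. This is only an illustration of the build, test, and push sequence, not a Jenkins configuration. The registry address, the image name, and the pytest test command are all hypothetical, and the sketch assumes the image has pytest installed.

```shell
# Hypothetical registry and image name; replace with your own.
IMAGE="registry.example.com/my-app"
# Jenkins' Git plugin typically exports GIT_COMMIT; fall back to "dev" elsewhere.
TAG="${GIT_COMMIT:-dev}"

# Build the image, run the tests inside a throwaway container,
# and push only if both earlier steps succeeded.
ci_pipeline() {
  docker build -t "$IMAGE:$TAG" . &&
  docker run --rm "$IMAGE:$TAG" python -m pytest &&
  docker push "$IMAGE:$TAG"
}
```

Calling ci_pipeline from the repository root runs the three steps in order. Chaining them with && means a failed test run stops the function before anything is pushed.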

Local Development Environments

Docker is also widely used for creating local development environments. Instead of manually setting up and configuring the development environment on each developer’s machine, you can use Docker to provide a consistent and isolated environment.

Say you’re developing a web application that requires a specific version of a database and a web server. You can define these dependencies in a Docker Compose file. Developers can then use Docker Compose to spin up the entire development environment with a single command, so everyone has the same setup.

The idea is to eliminate manual setup and configuration, reduce the risk of environment-specific issues, and allow developers to focus on writing code rather than dealing with environment inconsistencies.

Application Modernization

Docker is a valuable tool for modernizing legacy applications. Many organizations have older applications that are difficult to maintain and deploy due to their monolithic architecture and complex dependencies.

With Docker, you can containerize legacy applications and break them down into smaller, more manageable components. You can start by identifying the different services within the monolithic application and packaging them into separate Docker containers. This way, you can gradually modernize the application architecture without a complete rewrite.

Containerizing legacy applications also makes it easier to deploy and scale. Instead of dealing with complex installation procedures and dependency conflicts, you simply deploy the containerized application to any environment that supports Docker.

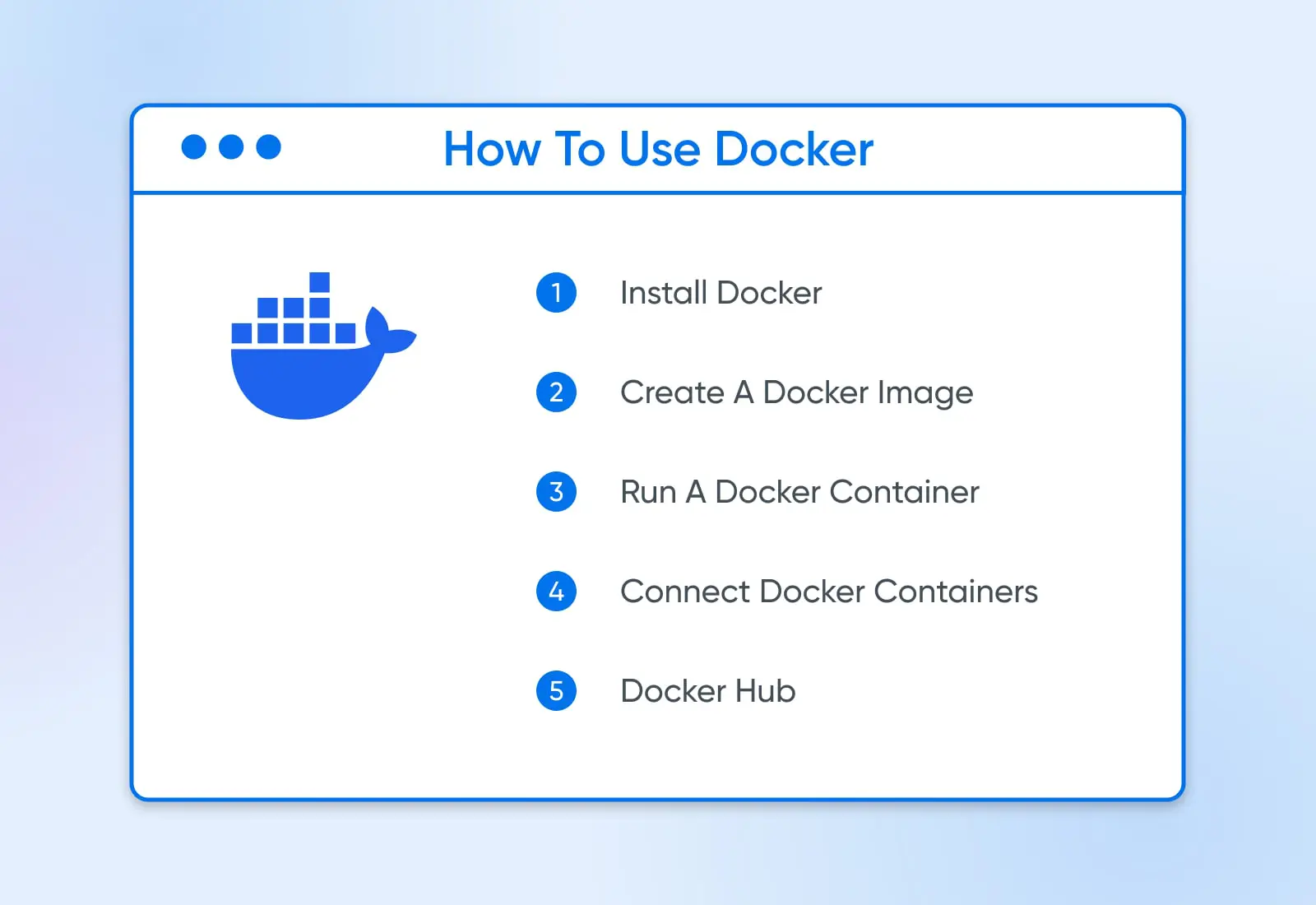

How To Use Docker

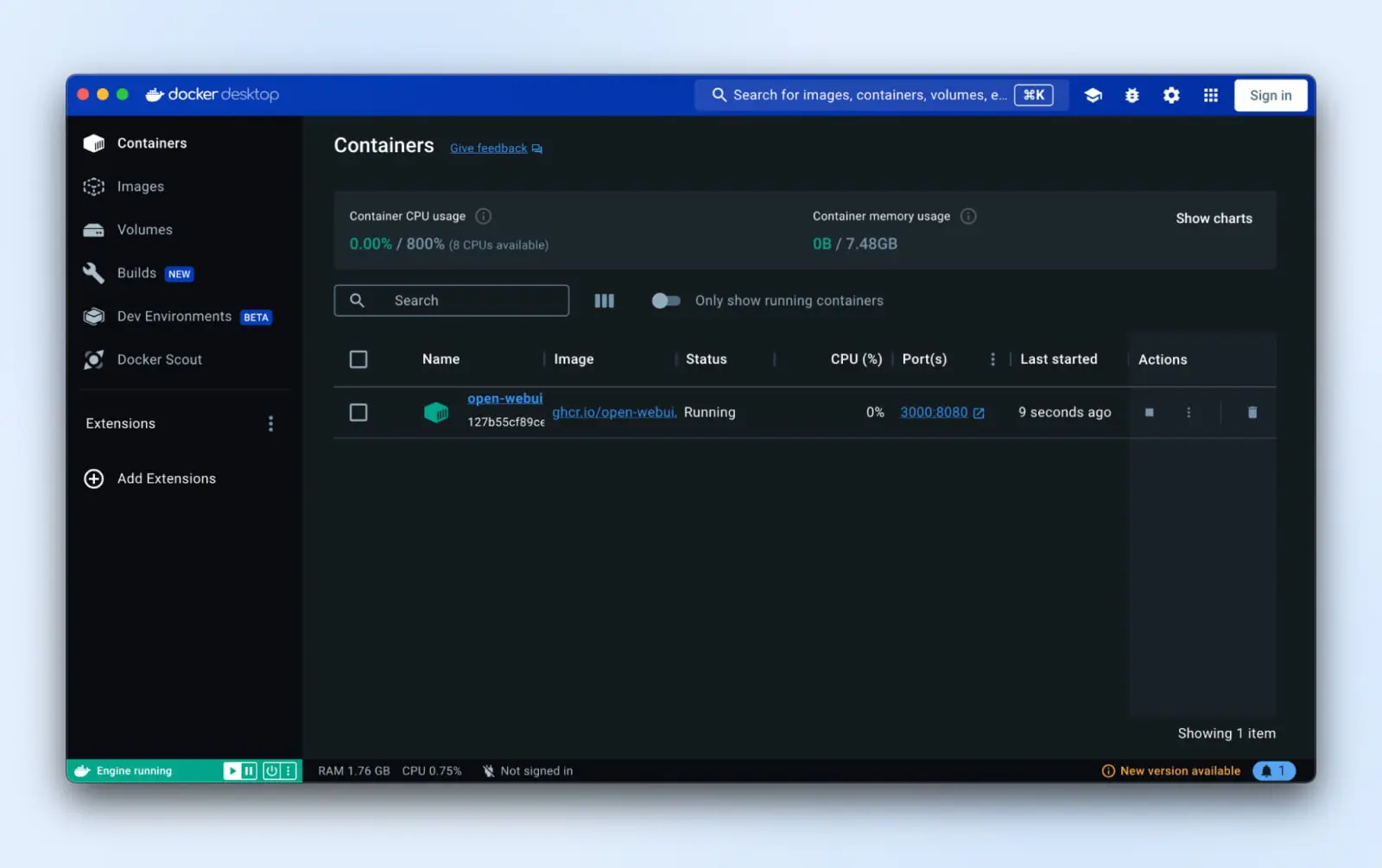

Now that we know the key components, let’s explore how Docker works:

1. Install Docker

To install Docker, visit the official Docker website and download the appropriate installer for your operating system. Docker provides installers for Windows, macOS, and various Linux distributions.

Once you have downloaded the installer, follow Docker’s installation instructions. The installation process is straightforward and shouldn’t take you very long.

2. Creating and Using Docker Images

Before creating your own Docker image, consider whether a pre-built image already meets your needs. Many common applications and services have official images available on Docker Hub, GitHub Container Registry, or other container registries. Using a pre-built image can save you time and effort.

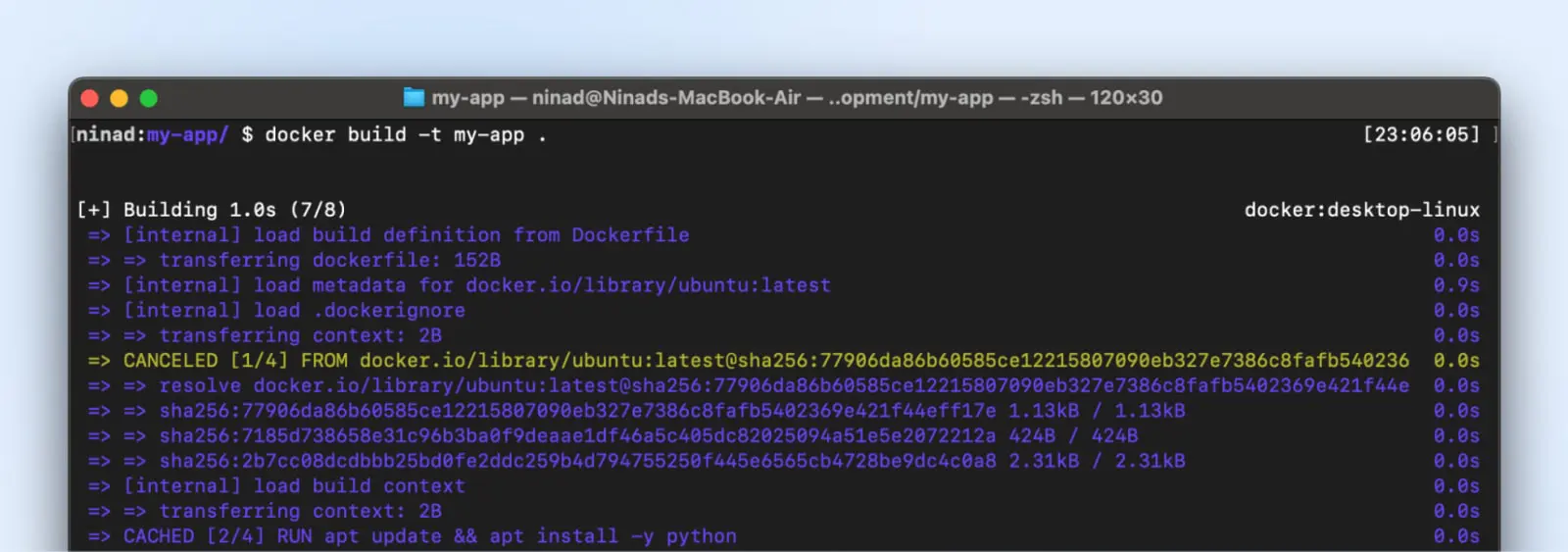

If you decide to create a custom Docker image, you’ll need a Dockerfile. This file defines the steps to build the image according to your requirements. Here’s how to proceed:

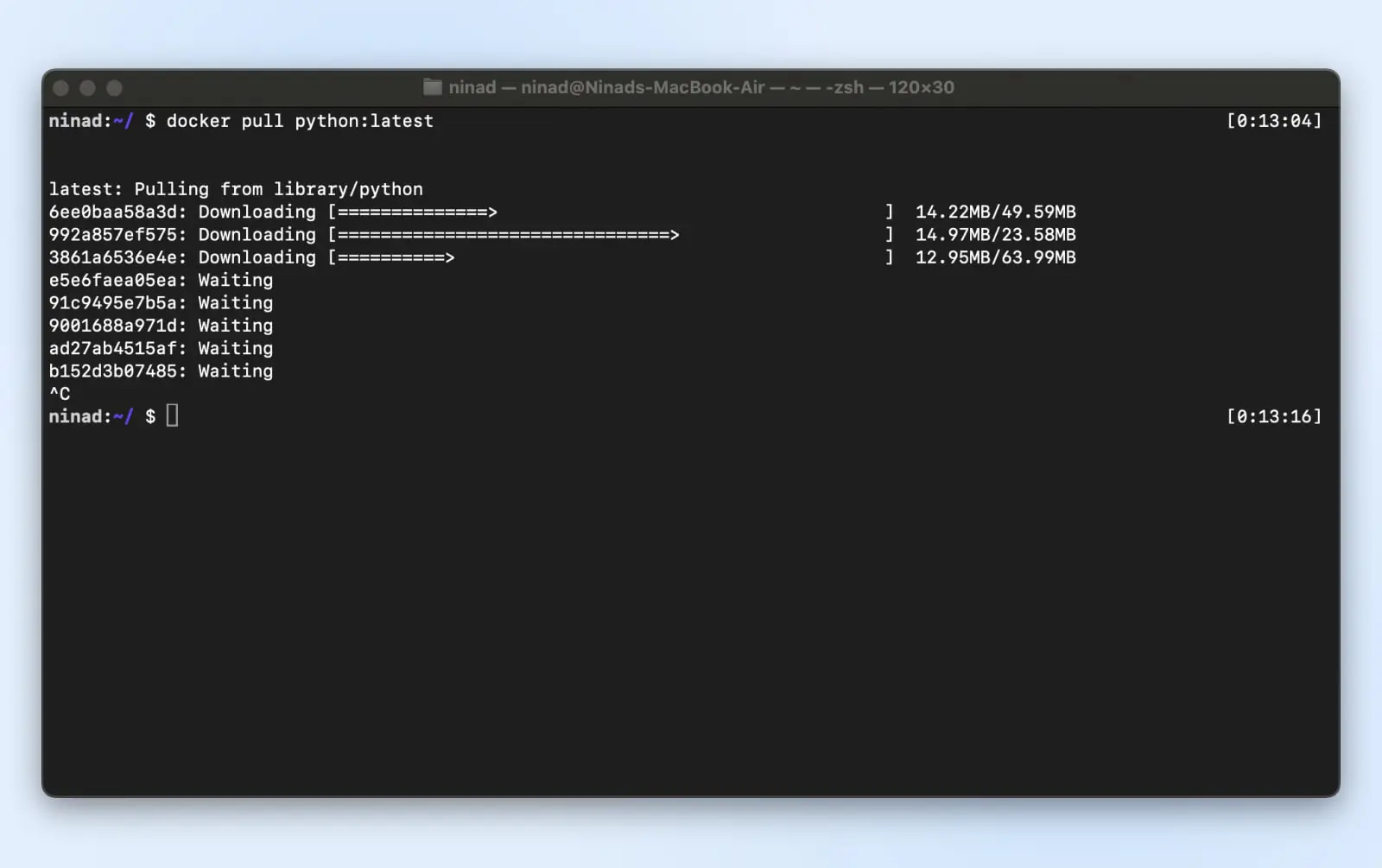

- Using Pre-Built Images: Search for an existing image on Docker Hub, GitHub Container Registry, or within your organization’s private repository. You can pull an image with the command docker pull <image_name>:<tag>, replacing <image_name> and <tag> with the specific name and version of the desired image.

- Creating Your Own Image: If a pre-built image doesn’t suit your needs, you can create your own. First, write a Dockerfile based on your requirements. Then, build your image with the following command:

docker build -t my-app .

This command tells Docker to build an image tagged as my-app using the current directory (.) as the build context. The image is then available in your local Docker environment for creating containers.
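Tagging each build with an explicit version is one way to get the versioning and rollback mentioned earlier. Here is a minimal sketch, assuming the my-app image name from above and hypothetical version numbers and port mapping:

```shell
# Hypothetical image name, matching the build example above.
IMAGE="my-app"

# Build the current directory and tag the result with an explicit version.
build_release() {
  docker build -t "$IMAGE:$1" .
}

# Start a container from a specific version, for example an older one
# that is still available locally, to roll back.
run_release() {
  docker run -d --name "$IMAGE-$1" -p 8080:80 "$IMAGE:$1"
}
```

For instance, build_release 1.1.0 would create my-app:1.1.0, and run_release 1.0.0 would start a container from the earlier my-app:1.0.0 image if it is still present on the machine.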

3. Running A Docker Container

Once you have a Docker image, you can use it to create and run containers. To run a container, use the docker run command followed by the image name and any additional options.

For example, to run a container based on the my-app image we built earlier, you can use the following command:

docker run -p 8080:80 my-app

This command starts a container based on the my-app image and maps port 8080 on the host system to port 80 inside the container.

4. Communicating Between Containers

Containers are isolated by default, but sometimes you need them to communicate with each other. Docker provides networking capabilities that allow containers to communicate securely.

You can create a Docker network using the docker network create command. Then, connect containers to that network. Containers on the same network can communicate with each other using their container names as hostnames.

For example, let’s say you have two containers: a web application and a database. You can create a network called my-network and connect both containers to it:

docker network create my-network

docker run -d --name web-app --network my-network my-app

docker run -d --name database --network my-network my-database

The -d flag runs each container in the background, so the second command isn’t blocked by the first. Now, the web app container can communicate with the database container using the hostname database.
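One way to check that name-based lookup works is to start a throwaway container on the same network and ping the other container by name. The helper below is a sketch of that idea. It assumes the public busybox image, which includes a ping tool, and the function name is made up for this example.

```shell
# Ping a container by name from a temporary container on the same network.
# $1 is the network name, $2 is the target container name.
check_reachable() {
  docker run --rm --network "$1" busybox ping -c 1 "$2"
}
```

With the setup above, check_reachable my-network database should report a reply if the database container is running and attached to my-network.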

5. Basic Docker Commands

Here are some basic Docker commands that you’ll frequently use:

- docker pull: Pulls the specified Docker image from the Docker Hub

- docker run: Runs a container based on a specified image

- docker build: Builds a Docker image from a Dockerfile

- docker ps: Lists all running containers

- docker images: Lists all available Docker images

- docker stop: Stops a running container

- docker rm: Removes a stopped container

- docker rmi: Removes a Docker image
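These commands are often used together. As a small sketch, the hypothetical helper below replaces a running container with a fresh one from the same image by chaining stop, rm, and run. The names passed to it are up to you.

```shell
# Replace a container with a fresh one from the given image.
# $1 is the container name, $2 is the image name.
recreate_container() {
  docker stop "$1" &&
  docker rm "$1" &&
  docker run -d --name "$1" "$2"
}
```

For example, recreate_container web-app my-app stops and removes the existing web-app container, then starts a new one in the background. Running docker ps afterwards should list the new container.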

These are just a few examples of the many Docker commands available. Refer to the Docker documentation for a comprehensive list of commands and how to use them.

6. Docker Hub

Docker Hub is a public registry hosting a vast collection of images. It serves as a central repository where developers can find and share Docker images.

You can browse the Docker Hub to find pre-built images for various applications, frameworks, and operating systems. These images can be used as a starting point for your applications or as a reference for creating your Dockerfiles.

To use an image from Docker Hub, simply use the docker pull command followed by the image name. For example, to pull the latest official Python image, you can run:

docker pull python:latest

This command downloads the Python image from Docker Hub and makes it available for use on your local system.

7. Mastering Docker Compose: Streamline Your Development

As you continue to explore and integrate Docker into your development workflow, it’s time to introduce a powerful tool in the Docker ecosystem: Docker Compose. Docker Compose simplifies the management of multi-container Docker applications, allowing you to define and run your software stack using a simple YAML file.

What is Docker Compose?

Docker Compose is a tool designed to help developers and system administrators orchestrate multiple Docker containers as a single service. Instead of manually launching each container and setting up networks and volumes via the command line, Docker Compose lets you define your entire stack configurations in a single, easy-to-read file named docker-compose.yml.

Key Benefits of Docker Compose:

- Simplified Configuration: Define your Docker environment in a YAML file, specifying services, networks, and volumes in a clear and concise manner.

- Ease of Use: With a single command, you can start, stop, and rebuild services, streamlining your development and deployment processes.

- Consistency Across Environments: Docker Compose ensures your Docker containers and services run the same way in development, testing, and production environments, reducing surprises during deployments.

- Development Efficiency: Focus more on building your applications rather than worrying about the underlying infrastructure. Docker Compose manages the orchestration and networking of your containers so you can concentrate on coding.

Using Docker Compose:

- Define Your App’s Environment: Create a docker-compose.yml file at the root of your project directory. In this file, you’ll define the services that make up your application, so they can be run together in an isolated environment.

- Run Your Services: With the docker-compose up command (docker compose up in current Docker releases, where Compose ships as a CLI plugin), Docker Compose will start and run your entire app. The first time you run it, Compose pulls the necessary images, builds any that are missing, and creates your defined services. After changing a Dockerfile, add the --build flag so the image is rebuilt.

- Scale and Manage: Easily scale your application by running multiple instances of a service. Use Docker Compose commands to manage your application lifecycle, view the status of running services, stream log output, and run one-off commands on your services.
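To make the first step concrete, here is a rough sketch that writes a small docker-compose.yml into a scratch directory. Everything in it is an assumption for illustration: the compose-demo directory, the web and db service names, the DATABASE_HOST variable, the example password, and the choice of the official postgres:16 image. It also assumes a Dockerfile for the web service sits next to the compose file.

```shell
# Create a scratch project directory so no existing file is overwritten.
mkdir -p compose-demo

# Write a two-service stack: a web app built from a local Dockerfile
# and a PostgreSQL database with a named volume for its data.
cat > compose-demo/docker-compose.yml <<'EOF'
services:
  web:
    build: .
    ports:
      - "8080:80"
    environment:
      DATABASE_HOST: db   # hypothetical variable read by the app
    depends_on:
      - db
  db:
    image: postgres:16
    environment:
      POSTGRES_PASSWORD: example   # placeholder, not for production
    volumes:
      - db-data:/var/lib/postgresql/data

volumes:
  db-data:
EOF
```

From inside compose-demo, docker compose up -d would start both services in the background, docker compose ps shows their status, docker compose logs -f web streams the web service’s output, and docker compose down stops and removes the containers. Compose puts both services on a shared network, so the web service can reach the database at the hostname db.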

Integrating Docker Compose into your development practices not only optimizes your workflow but also aligns your team’s development environments closely. This alignment is crucial for reducing “it works on my machine” issues and enhancing overall productivity.

Embrace Docker Compose to streamline your Docker workflows and elevate your development practices. With Docker Compose, you’re not just coding; you’re composing the future of your applications with precision and ease.

Dockerize Your Way To Dev Success With DreamCompute

We’ve journeyed through the transformative world of Docker, uncovering how it elegantly solves the infamous “But, it works on my machine!” dilemma and delving into its myriad benefits and applications. Docker’s containerization prowess ensures your projects run seamlessly and consistently across any environment, liberating you from the all-too-common frustrations of environmental discrepancies and dependency dilemmas.

Docker empowers you to transcend the common woes of code behaving unpredictably across different machines. It allows you to dedicate your energy to what you excel at—crafting remarkable code and developing stellar applications.

For both veteran developers and those just embarking on their coding odyssey, Docker represents an indispensable tool in your development toolkit. Think of it as your reliable ally, simplifying your development process and bolstering the resilience of your applications.

As you delve deeper into Docker’s expansive ecosystem and engage with its vibrant community, you’ll discover endless opportunities to harness Docker’s capabilities and refine your development practices.

Why not elevate your Docker experience by hosting your applications on DreamHost’s DreamCompute? DreamCompute offers a flexible, secure, and high-performance environment tailored for running Docker containers. It’s the perfect platform to ensure that your Dockerized applications thrive, backed by robust infrastructure and seamless scalability.

Embark on your Docker adventures with DreamCompute by your side. Build, ship, and run your applications with unparalleled confidence, supported by the comprehensive capabilities of Docker and the solid foundation of DreamCompute.
